...or with 2.0, you get Snapshots.

While Asymmetric Expansion is cool, and more than a little revolutionary, and NFSoRDMA brings new levels of performance to the venerable NFS protocol, as far as I’m concerned the biggest new feature in VASTOS 2.0 is the addition of snapshots.

Over the past decade or so, storage snapshots have become a key part of most organizations’ operations. Snapshots provide everything from short-term data protection during a database schema upgrade, to consistent sources for backups, to a painless way to create multiple logical copies of everything from databases for developers to front-end web-server containers.

VAST Data is a startup with a mission to create a universal data platform, so snapshots have always been part of our grand design, and with 2.0, our users can start taking advantage of them. Our product philosophy is based on breaking the trade-offs created by conventional solutions, so it should come as a surprise to no one that VAST takes a fresh approach to snapshots, and our architecture choices have some intriguing implications.

All Snapshots Are Not Created Equal

While almost every modern data store today supports the ability to take snapshots of data in one way or another, the underlying technologies they use vary significantly.

The first generation of snapshots used copy-on-write technology. When data was overwritten, these systems copied the now-old version of the data to a log file or a dedicated snapshot area. This causes I/O amplification because snapshotted data must be written multiple times as snaps are taken, resulting in both a decrease in storage performance and unnecessary wear on storage media, which is particularly bad for modern QLC flash media.

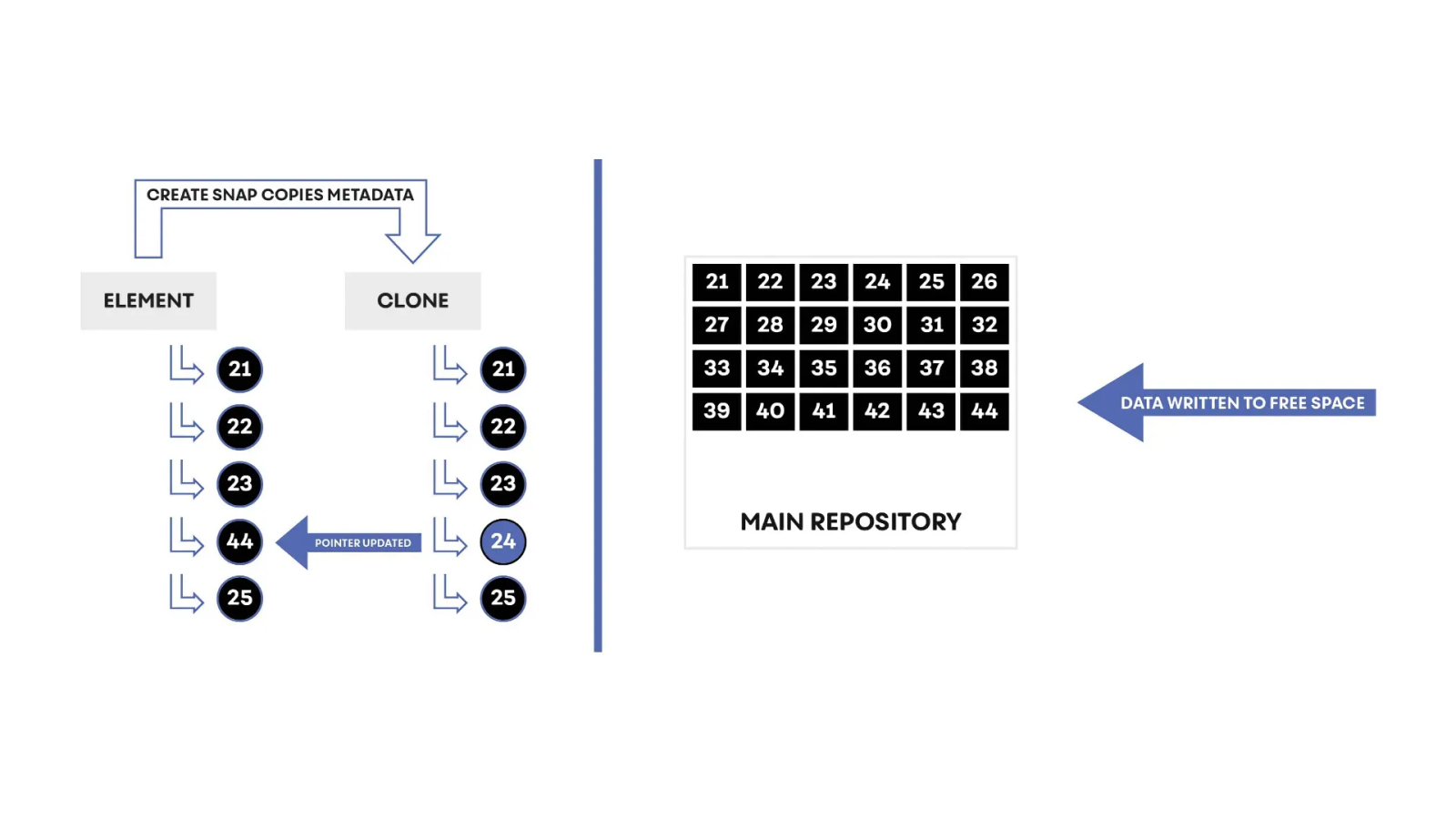

More modern snapshot providers, typically built into metadata-centric storage systems, redirect logical overwrites into free space within the storage system and use metadata pointers to differentiate between the snapshot and the active volume. This redirect-on-write approach eliminates the performance hit and the wear that come from a copy-on-write approach. The best of these systems can operate with multiple active snapshots on a volume without any noticeable performance impact.

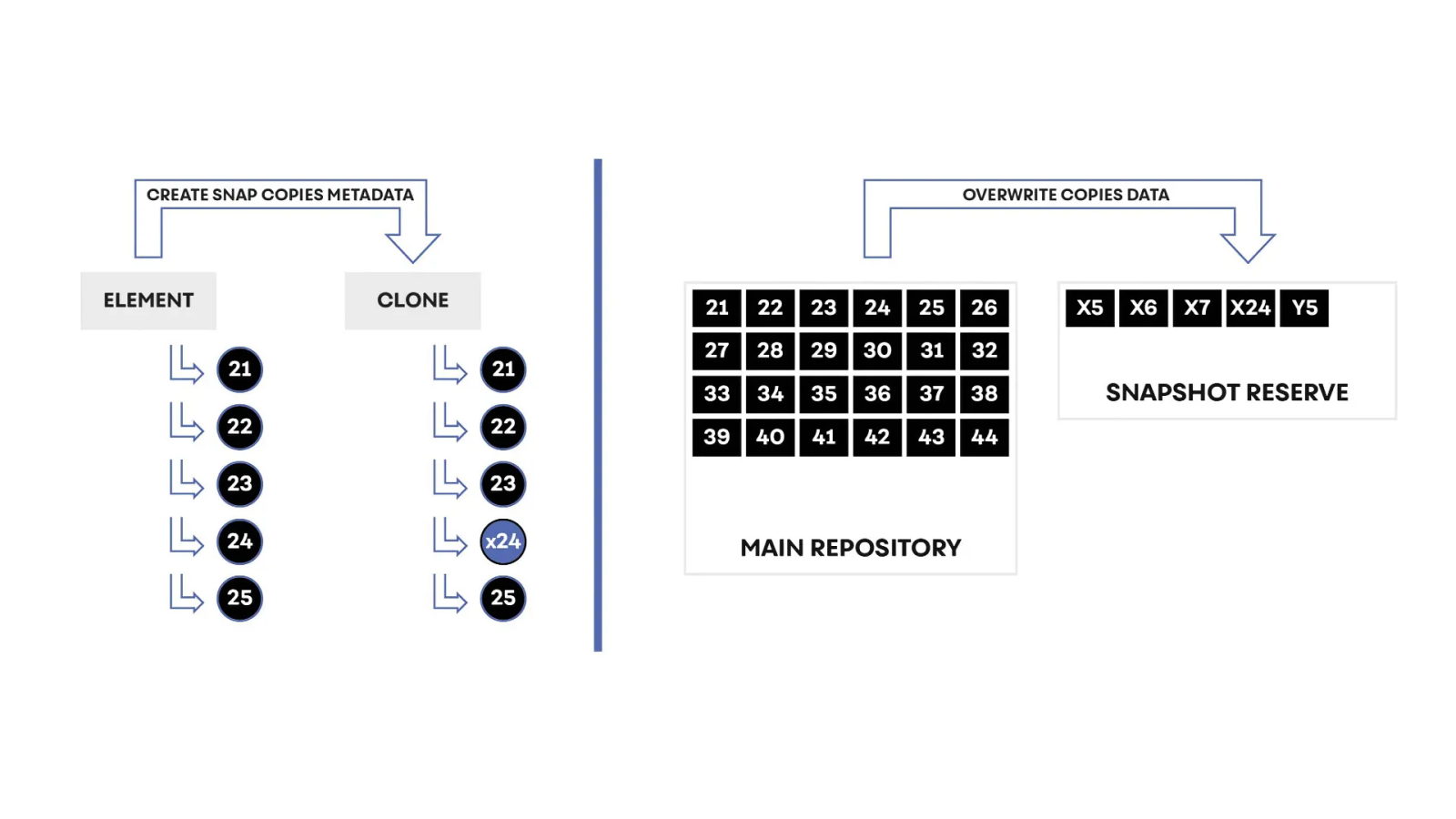
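
To make the difference in write amplification concrete, here is a minimal toy sketch of the two approaches described above. The class names, the single-snapshot limit, and the in-memory lists are all illustrative assumptions; this is not how any particular product implements either technique.

```python
class CopyOnWriteVolume:
    """Toy copy-on-write volume: old data is copied out, then overwritten in place."""

    def __init__(self, blocks):
        self.blocks = list(blocks)   # active data, overwritten in place
        self.snapshot_area = {}      # block index -> preserved old contents
        self.snapshot_active = False
        self.physical_writes = 0

    def take_snapshot(self):
        self.snapshot_area = {}
        self.snapshot_active = True

    def write(self, index, data):
        # First overwrite after the snapshot: preserve the old data (extra write).
        if self.snapshot_active and index not in self.snapshot_area:
            self.snapshot_area[index] = self.blocks[index]
            self.physical_writes += 1
        self.blocks[index] = data
        self.physical_writes += 1

    def read_snapshot(self, index):
        return self.snapshot_area.get(index, self.blocks[index])


class RedirectOnWriteVolume:
    """Toy redirect-on-write volume: new data lands in free space, pointers move."""

    def __init__(self, blocks):
        self.store = list(blocks)               # physical space, never overwritten
        self.active = list(range(len(blocks)))  # logical index -> physical index
        self.snapshot = None
        self.physical_writes = 0

    def take_snapshot(self):
        # Toy simplification: keep the old pointers; no data is copied.
        self.snapshot = list(self.active)

    def write(self, index, data):
        self.store.append(data)                 # write once, into free space
        self.active[index] = len(self.store) - 1
        self.physical_writes += 1

    def read_snapshot(self, index):
        return self.store[self.snapshot[index]]


cow = CopyOnWriteVolume(["a", "b", "c"])
row = RedirectOnWriteVolume(["a", "b", "c"])
for volume in (cow, row):
    volume.take_snapshot()
    volume.write(1, "B")
    volume.write(1, "B2")

print(cow.physical_writes, row.physical_writes)  # 3 2
```

Both toy volumes still return `"b"` from `read_snapshot(1)`, but the copy-on-write volume paid one extra physical write to get there.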

The latest approach is used in indirection-based systems that always write data in free space. They create snapshots by cloning the metadata for a volume. Since the metadata for a large volume is itself large, creating, deleting, and managing snapshots on these indirect-and-clone systems can be high-overhead events that cause a lot of I/O.

VASTsnaps Start With Indirection

The first key to understanding the utilitarianly named VASTsnap technology behind VAST's snapshots is to remember that the VAST Element Store performs all writes by indirection. Rather than overwriting data in place, the Element Store writes data to free space on Storage Class Memory SSDs and builds metadata pointers associating the logical location that the new data was written to with its dynamic physical location. When the system later reduces, erasure-codes, and migrates that data to free space on QLC flash SSDs, it updates the metadata pointers.

If we oversimplify a little, an element (file, object, folder, symlink, etc.) in the VAST Element Store is defined as a series of pointers to the locations on Storage Class Memory or flash where the data is stored. A snapshot is simply a different collection of pointers - not to the current contents of the element - but to the contents of the element at the time the snapshot was taken.

Building Snapshots Into the Metadata

Previous snapshot technologies were based on making copies of data and, more recently, of the metadata that defined the object(s) being snapped. On most systems, this creates a flurry of activity whenever a snapshot is created, or deleted and unwound.

When we were designing the VAST Element Store, we decided we wanted to avoid all the mistakes we’d seen previous snapshot providers make...so we decided our system would:

Not force the administrator to manage snapshot space/reserves. Snapshots use any free space

Perform zero copies of data for snapshots

Have negligible performance impact with an arbitrary number of snapshots active

Have minimal performance impact at snapshot creation and deletion times

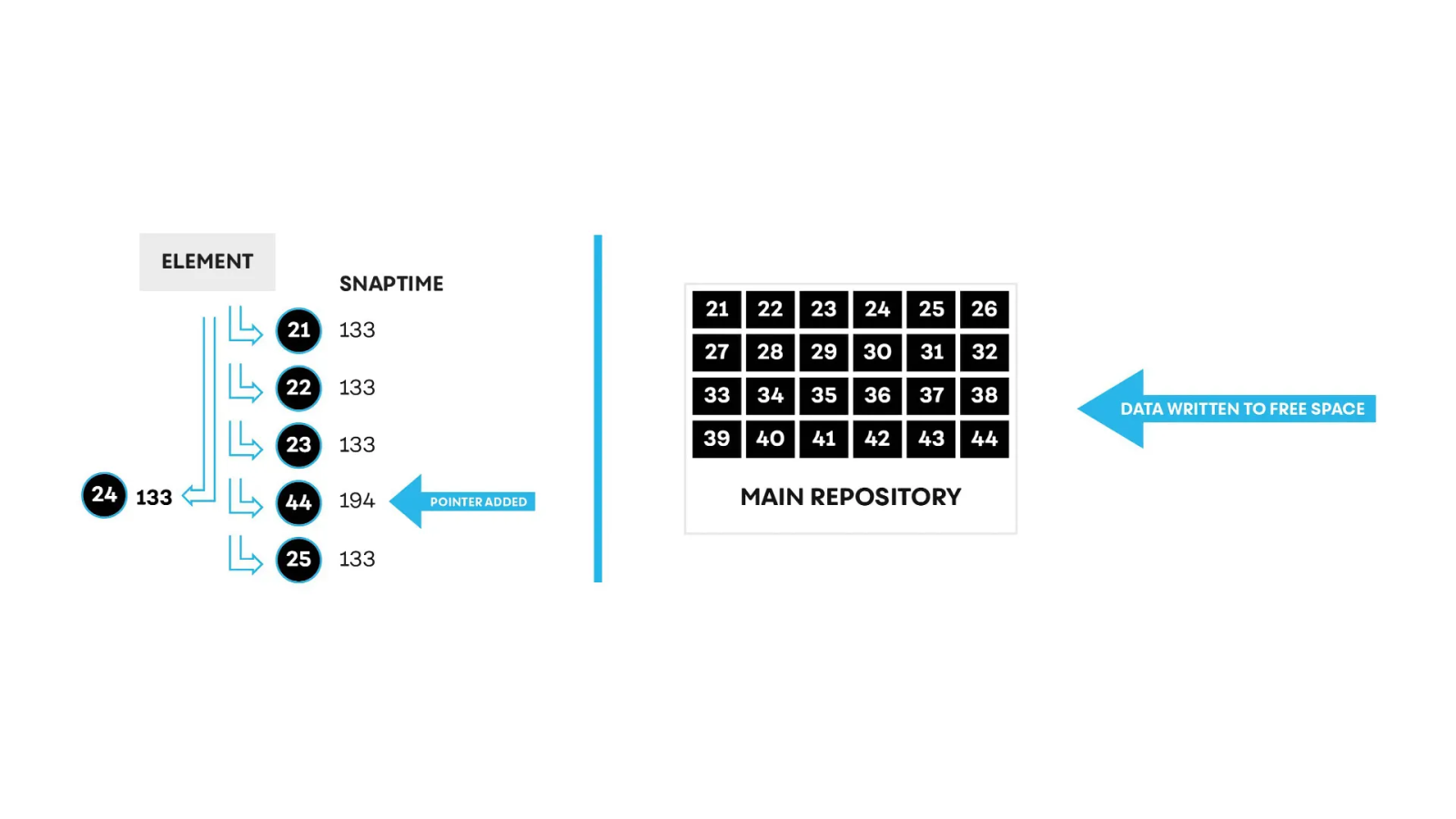

Instead of making clones of a volume’s metadata every time we create a snapshot, VAST snapshot technology is built deep into the metadata itself. Every metadata object in the VAST Element Store is time-stamped with what we’ve dubbed a snaptime. The snaptime is a global system counter that dates back to the installation of a VAST cluster and is advanced synchronously across all the VAST Servers in a cluster.

Just like data, metadata in the VAST Element Store is never directly overwritten. When a user overwrites part of an element, the new data is written to free space on Storage Class Memory SSDs. The system creates a pointer to the physical location of the data and links that pointer into the V-Tree metadata that defines the object.

When that user reads the file back, the system will use the pointer for that part of the file with the highest snaptime and ignore the older versions.

Since each metadata object is time-stamped, the system doesn’t have to copy or clone any metadata to create the snapshots. Data is continuously time-stamped whether there are active snapshots or not.

When a user accesses data through a mounted snapshot, the system will search the system metadata for the objects/pointers with the highest snaptime less than or equal to the snapshot time instead of the object/pointers with the highest overall snaptime.

In the figure above the 4th extent of an element that was originally saved at snaptime 133 has been overwritten at snaptime 194. The system wrote the new data to block 44 and linked the pointer to that block to the element with the 194 timestamp.
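
The lookup rule described above can be sketched in a few lines. This is a toy model under stated assumptions, not the Element Store's actual data structure: the `Extent` class, its method names, and block 17 for the original data are all hypothetical; only snaptimes 133 and 194 and block 44 come from the example.

```python
from bisect import bisect_right


class Extent:
    """Toy versioned pointer for one extent, kept sorted by snaptime."""

    def __init__(self):
        self.snaptimes = []  # ascending snaptimes of each version
        self.blocks = []     # physical block for the matching snaptime

    def write(self, snaptime, block):
        # Assumption: writes arrive in non-decreasing snaptime order, and a
        # second write within the same snaptime replaces the first.
        if self.snaptimes and self.snaptimes[-1] == snaptime:
            self.blocks[-1] = block
        else:
            self.snaptimes.append(snaptime)
            self.blocks.append(block)

    def resolve(self, as_of=None):
        """Return the block for a live read (as_of=None) or a snapshot read."""
        if as_of is None:
            count = len(self.snaptimes)                 # highest snaptime overall
        else:
            count = bisect_right(self.snaptimes, as_of)  # highest snaptime <= as_of
        return self.blocks[count - 1] if count else None


extent = Extent()
extent.write(133, 17)   # original data; block 17 is a made-up location
extent.write(194, 44)   # the overwrite from the example above

print(extent.resolve())           # 44: live read follows the newest pointer
print(extent.resolve(as_of=150))  # 17: a snapshot at snaptime 150 sees the old data
```

Note that taking the "snapshot" at snaptime 150 required no work at write time; the snapshot view is just a different query over the same versioned pointers.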

When a user schedules a snapshot, the system preserves the metadata with the highest snaptime at or before the snapshot time until the snapshot's retention period ends. Neither creating nor maintaining the snapshot causes the system to copy data, copy metadata, or perform any significant work at all.

Metadata with obsolete timestamps that no snapshot needs, along with the data that metadata points to, is removed by background housekeeping tasks as data migrates from Storage Class Memory to QLC.
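
As a rough illustration of that housekeeping decision, the sketch below works out which versions of a single extent could be reclaimed. It assumes a version is still needed if it is the newest one or if it is the highest version at or before some preserved snapshot's snaptime; the function name and the sample snaptimes are hypothetical.

```python
from bisect import bisect_right


def reclaimable_versions(version_snaptimes, snapshot_snaptimes):
    """Return the version snaptimes that no live read or snapshot read can reach."""
    versions = sorted(version_snaptimes)
    if not versions:
        return []
    needed = {versions[-1]}                 # the live view always needs the newest
    for snapshot in snapshot_snaptimes:
        count = bisect_right(versions, snapshot)
        if count:                           # highest version <= snapshot time
            needed.add(versions[count - 1])
    return [v for v in versions if v not in needed]


# Four versions of one extent, with snapshots preserved at snaptimes 150 and 200.
print(reclaimable_versions([133, 160, 194, 210], [150, 200]))  # [160]
# With no snapshots preserved, everything but the newest version can go.
print(reclaimable_versions([133, 160, 194, 210], []))          # [133, 160, 194]
```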

System-Wide Consistency

Since the snaptime manager runs synchronously across the entire VAST Cluster, all the snapshots taken for a given snaptime across a cluster are always consistent. Each metadata object is stamped with the timestamp that was current when the transaction creating that object was initiated. This ensures that for any snaptime, the system is consistent with each transaction posted in full.

Unlike legacy approaches, where data is organized by volumes and data stores aren’t global, global snapshots with VAST are easy to capture. There’s no need to create consistency groups or follow your storage vendor’s complex rules for placing volumes in a consistency group that has some local snapshot limitation.

When you schedule snapshots for multiple elements hourly at xx:00, all those snapshots will be consistent.
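
The stamping rule in this section, where every object carries the snaptime that was current when its transaction began, can be sketched as follows. This is a single-process toy with hypothetical class and function names; it ignores everything a real cluster must do to advance the counter synchronously.

```python
class SnaptimeClock:
    """Hypothetical stand-in for the cluster-wide snaptime counter."""

    def __init__(self, start=0):
        self.now = start

    def advance(self):
        self.now += 1
        return self.now


class Transaction:
    """Stamps every metadata object it creates with the snaptime at initiation."""

    def __init__(self, clock):
        self.snaptime = clock.now  # captured once, when the transaction starts
        self.objects = []

    def create(self, name):
        self.objects.append((name, self.snaptime))


def visible_in_snapshot(objects, snapshot_snaptime):
    """Names of the objects a snapshot at the given snaptime would see."""
    return [name for name, stamp in objects if stamp <= snapshot_snaptime]


clock = SnaptimeClock(start=7)
txn = Transaction(clock)
txn.create("directory entry")
clock.advance()               # the counter ticks to 8 mid-transaction
txn.create("file extent")     # still stamped with snaptime 7

print(visible_in_snapshot(txn.objects, 7))  # both objects
print(visible_in_snapshot(txn.objects, 6))  # []
```

Because both objects share one stamp, any snapshot sees either the whole transaction or none of it, which is the property the section describes.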

The Benefits of Automatic VASTsnaps

The VAST Element Store timestamps every data and metadata update with a snaptime, which increments approximately once a minute. VASTsnaps use these snaptimes to view data as of a preserved snaptime.

VASTsnaps are essentially queries against the Element Store metadata. They don’t cause the system to copy data or metadata, so they have a negligible impact on performance and, because they perform no copies, on QLC endurance.

All the VAST Servers in a cluster share the metadata through VAST’s disaggregated, shared-everything design, allowing any VAST Server to present the data of any snapshot by simply querying the system metadata without needing to copy or clone.

VASTsnaps Benefits:

No snapshot reserve or space allocation

Zero copies. Snapshots never cause data to be copied

System-wide consistency

Very low metadata overhead on writes

Low read metadata overhead

I think you’ll agree that with this first version of VASTsnaps we’ve avoided the big mistakes previous snapshot approaches have made. If you think real hard, and squint just a little out of your non-dominant eye, you may see that the snaptimes on all that metadata could lead to some other interesting uses. We’ve thought of them too, so stay tuned for future announcements.