As part of our 2.0 release, we are happy to announce that VAST Data Platform clusters can be expanded asymmetrically. Scale-out storage cluster expansion, in and of itself, is nothing revolutionary – but asymmetry changes everything, and all-Flash makes asymmetry possible.

To understand what we’re doing, let’s jump into the wayback machine and review the various ways that storage has been architected in the world before VAST:

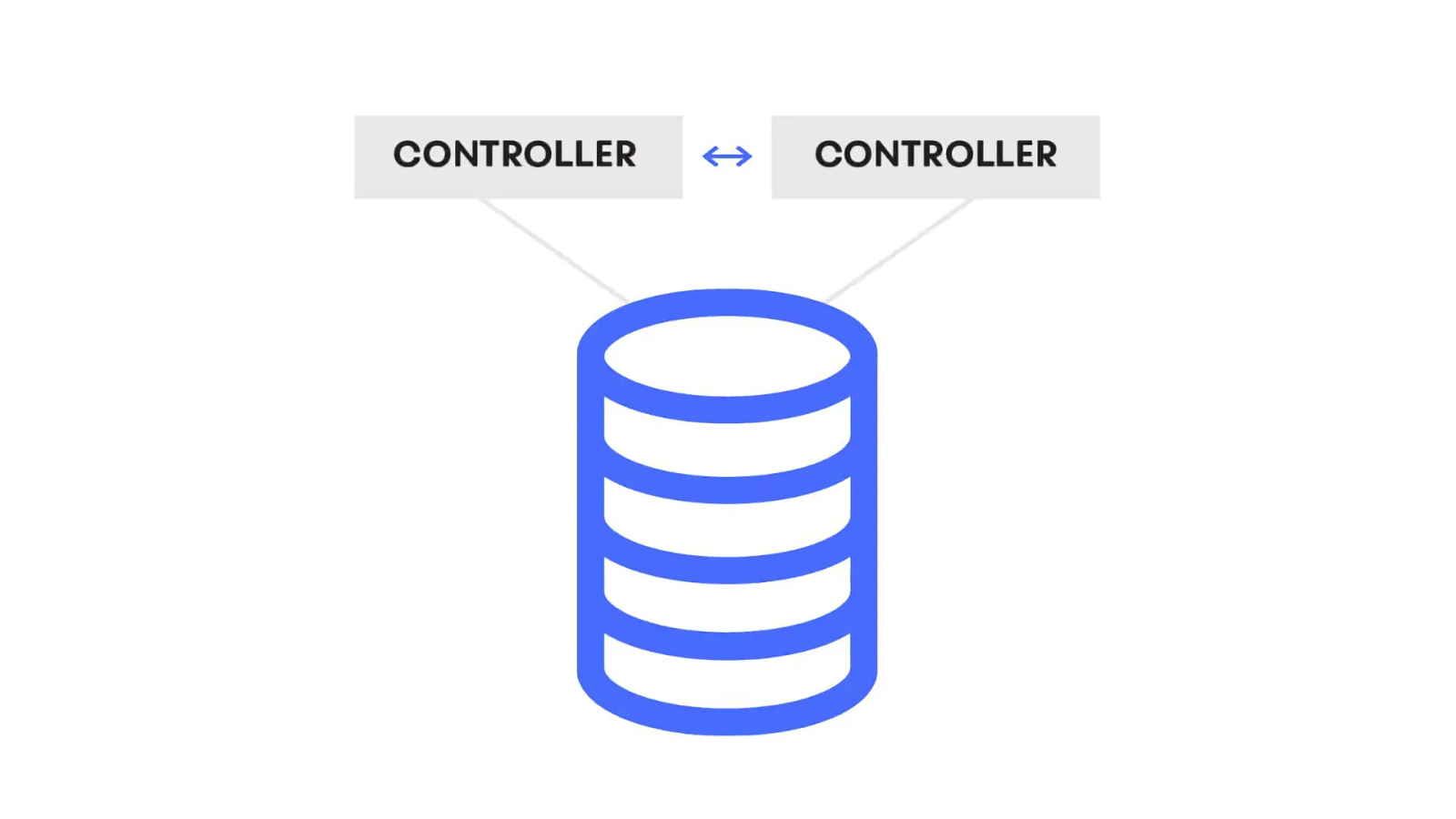

Dual Controller-Based Architectures

Servers: In the case of the classic dual-controller architecture, failover must be done between two servers that have equal capability in order to preserve service quality during failure.

Storage: Data within a tier is striped across a group of homogeneous drives to preserve stripe performance and make drive failures easy to handle. Since the surviving drives must have enough free space to rebuild the contents of the largest drive that fails, system vendors opt to make all drives the same size and eliminate rebuild uncertainty.
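To make the rebuild concern concrete, here is a minimal Python sketch built on simplifying assumptions of our own: every drive is filled to the same fraction of its capacity, and a failed drive’s data is rebuilt into the free space left on the surviving drives. The function name and the example drive sizes are hypothetical.

```python
def can_rebuild_after_largest_failure(drive_sizes_tb, used_fraction):
    """Return True if the surviving drives have enough free space to hold
    the rebuilt contents of the largest drive.

    Simplifying assumption: every drive is filled to the same fraction
    (used_fraction, between 0 and 1) of its own capacity.
    """
    largest = max(drive_sizes_tb)
    survivors = list(drive_sizes_tb)
    survivors.remove(largest)  # drop one copy of the largest drive (the failed one)

    data_to_rebuild = largest * used_fraction
    free_on_survivors = sum(size * (1 - used_fraction) for size in survivors)
    return free_on_survivors >= data_to_rebuild


# Homogeneous pool vs. a pool with one oversized drive, both 50% full.
print(can_rebuild_after_largest_failure([4, 4, 4, 4], 0.5))   # True
print(can_rebuild_after_largest_failure([4, 4, 4, 16], 0.5))  # False
```

With four 4 TB drives at 50% full, the survivors can absorb the failed drive’s data; swap one of them for a 16 TB drive and the same check fails, which is exactly the uncertainty vendors avoid by standardizing on one drive size.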

Clustered Controllers

Servers: In a more enterprise-oriented many-controller architecture, controllers still own an explicit collection of drives and are grouped into failover pairs within a larger cluster. To preserve I/O consistency across cluster operations, all servers must be homogeneous, even when the cluster is built from dual-controller pairs.

Storage: The storage challenge here is the same as the dual-controller problem, for all the same reasons. The only way to solve the heterogeneity problem is to create tiers of different storage technologies.

Parallel File Storage

Servers: Parallel file systems are often built on the legacy controller-pair paradigm, where file servers are layered on top of the controllers to translate block protocols into file access that is managed by an independent metadata manager. In this topology, server and controller resources are essentially ‘fixed’: more computing resources can’t be added to controllers, and file servers and controllers must be grouped into pools of like scalable units so that no slower entity drags down the performance of parallel file operations.

Storage: Just as with servers and controllers, storage devices cannot be mixed within a pool or tier of storage because the slowest disk will drag down the performance of any parallel operation (essentially: Amdahl’s law).
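A simple straggler model illustrates the point. The sketch below assumes, hypothetically, that a full-stripe read asks every drive for one equal-sized chunk in parallel and completes only when the slowest drive returns its chunk; the drive rates are illustrative, not measured.

```python
def stripe_time_s(chunk_mb, drive_rates_mb_s):
    """A full-stripe read finishes when the slowest drive delivers its chunk."""
    return max(chunk_mb / rate for rate in drive_rates_mb_s)


def stripe_throughput_mb_s(chunk_mb, drive_rates_mb_s):
    """Effective throughput of one stripe read: total data / time of slowest member."""
    total_mb = chunk_mb * len(drive_rates_mb_s)
    return total_mb / stripe_time_s(chunk_mb, drive_rates_mb_s)


uniform = [200.0] * 8            # eight drives at a hypothetical 200 MB/s
mixed = [200.0] * 7 + [100.0]    # the same stripe with one slower drive
print(round(stripe_throughput_mb_s(1.0, uniform)))  # 1600
print(round(stripe_throughput_mb_s(1.0, mixed)))    # 800
```

Replacing just one of eight drives with a drive half as fast halves the stripe’s throughput, because every member now effectively runs at the slowest member’s pace.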

Shared-Nothing System

Shared-nothing storage systems consist of servers with direct-attached storage inside each node. Some nodes are configured with a high CPU-to-disk ratio to handle IOPS and metadata operations; others have lots of capacity per CPU and are often used for archival datasets. Data is striped across servers for data protection.

Since servers cannot be decoupled or scaled independently from disks, and since disks are often the unit of data protection, shared-nothing architectures discourage the use of heterogeneous servers and drives within a storage pool and lock customers into a rigid price/performance envelope at the time of node purchase.

4 styles of storage systems; 3 common problems:

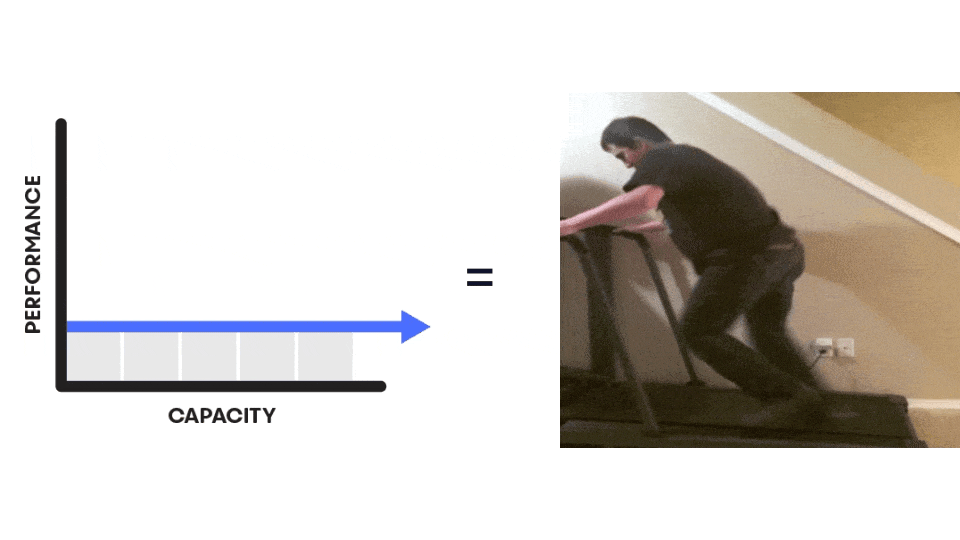

Compute for legacy storage architectures needs to be homogeneous, at least within a storage pool or storage tier, because systems need to provide a consistent performance experience during failover as well as for parallel I/O operations.

Disks need to be of a similar ilk within a stripe group because performance needs to be consistent across drives, and failure recovery mechanisms need assurance that the remaining disks have sufficient capacity to rebuild what has failed within some rigid stripe set.

Therefore, each incremental controller or storage addition to a storage cluster typically means either a forklift upgrade of the existing cluster investment or another tier of infrastructure that just creates data silos and migration hassles.

Wouldn’t it be great to just add FLOPS when applications need CPU resources, and capacity when the system needs to hold more data… and to never have to worry about rightsizing a rigid node specification or forklift upgrades of storage tiers/pools?

Enter: VAST’s DASE Asymmetric Architecture

We’re happy today to announce that VAST clusters now feature independent expansion of both VAST’s stateless storage servers and NVMe storage enclosures. As mentioned, the expansion itself is not new… but as we pull back the curtain, you will see that VAST’s architecture decisions eliminate decades of compromise that customers have endured because of legacy storage limitations.

The path to asymmetry is paved by a few architectural advantages that VAST enjoys, which I’ll recap here:

NVMe over Fabrics: this revolutionary storage protocol combines the latency of NVMe DAS with the scale of data center Ethernet or InfiniBand. By using NVMe over Fabrics (NVMe-oF) as an internal cluster transport, VAST has disaggregated the logic of its VAST Data Platform cluster architecture from its state. Thanks to a new global data layout, every CPU has shared access to all of the cluster’s NVMe QLC drives and NVMe XPoint drives, as if they were all direct-attached to every CPU. Now, CPUs can be added independently of storage capacity.

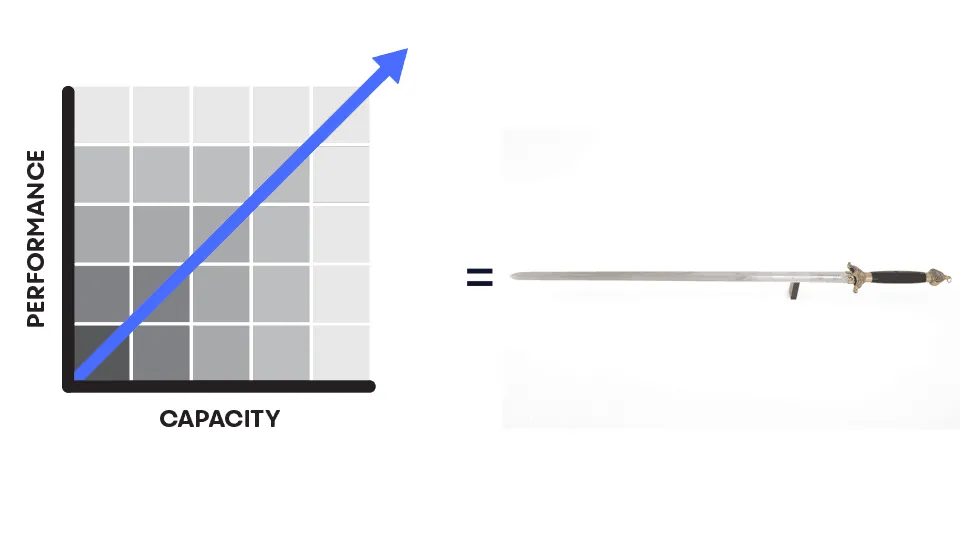

(NVMe) Flash: Of course, NVMe drives make it possible to connect remote drives over this NVMe fabric, and that’s the key to disaggregation… but Flash, in general, provides a much more significant advantage for the VAST architecture: it breaks the long-standing tradeoff between performance and capacity that has always plagued legacy architectures born in the HDD era. The reason is this: in the long-term evolution of a disaggregated, scalable all-Flash system, as storage grows denser it will also grow proportionately in performance, so an asymmetrically scaled Flash architecture is ultimately and intrinsically well-balanced over time. It’s like a perfectly balanced sword.

(Figures: The Evolution of Mechanical Media; The Evolution of Silicon Storage)

So, the VAST architecture was designed at a time when new technologies made it possible to rethink the asymmetric composition of components. That is only half the battle: to take advantage of these new degrees of freedom, the VAST engineering team needed to decouple the system from rigid hardware constructs and virtualize as much as possible, enabling intelligent scheduling of system services and data placement. Let’s look at servers and storage one by one:

Servers:

VAST’s microservice-based architecture enables any stateless storage container to access and operate on any Flash or Storage Class Memory device. Storage tasks are scheduled globally according to system load… which makes it possible to assign tasks such as data reduction or data protection to the servers experiencing the least I/O load.

VAST’s global load scheduler is resource aware, assigning tasks to VAST Servers in the cluster based on their availability. As you add more powerful VAST Servers to a cluster, those servers automatically take on a larger portion of the work in proportion to the fraction of total CPU horsepower they provide in the cluster. Your new and old servers work together, each pulling its weight, until you decide they should go.
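The proportional behavior described above can be approximated with a greedy, weighted least-loaded policy. The Python sketch below is our own illustration, not VAST’s scheduler: each server’s “CPU horsepower” is a hypothetical relative weight, every task has the same cost, and each task goes to the server whose load per unit of horsepower would be lowest after taking it.

```python
def assign_tasks(server_power, num_tasks, task_cost=1.0):
    """Greedy weighted least-loaded assignment (illustrative only).

    server_power maps a server name to its relative CPU horsepower.
    Each task goes to the server whose load per unit of horsepower
    would be lowest after taking the task; ties go to the first server
    in dict order.
    """
    load = {name: 0.0 for name in server_power}
    counts = {name: 0 for name in server_power}
    for _ in range(num_tasks):
        target = min(server_power, key=lambda s: (load[s] + task_cost) / server_power[s])
        load[target] += task_cost
        counts[target] += 1
    return counts


# Two original servers plus one newer server with twice the horsepower.
cluster = {"old-1": 1.0, "old-2": 1.0, "new-1": 2.0}
print(assign_tasks(cluster, 400))  # {'old-1': 100, 'old-2': 100, 'new-1': 200}
```

The newer server ends up carrying half of the work, in proportion to its share of total horsepower, while the older servers keep contributing their share.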

Storage:

Unlike classic storage systems that manage data and failures at the drive level, VAST’s architecture manages data at the Flash erase block level – a much smaller unit of data than a full drive. Data and data protection stripes are simply virtualized and declustered across a global pool of Flash drives and can be moved to other drives without concern for their physical locality.

Since the system doesn’t think in “drive” terms, as new drives come into the cluster, the system simply views the new resources as more capacity that can be used to create data stripes.

When larger SSDs are added to a cluster those SSDs will be selected for more data stripes than the smaller drives so the system will use the full capacity of each drive.
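Here is a minimal sketch of capacity-aware stripe placement. It assumes each drive is modeled simply as a count of free erase blocks and that each stripe takes one block from each of `stripe_width` distinct drives. The greedy “most free blocks first” rule, the function name, and the drive names are hypothetical illustrations of the behavior described above, not VAST’s actual placement algorithm.

```python
def place_stripes(free_blocks, stripe_width):
    """Greedily build stripes, one block per distinct drive, always picking
    the drives with the most free blocks left (hypothetical policy)."""
    free = dict(free_blocks)
    layout = []
    while True:
        candidates = [d for d in free if free[d] > 0]
        # Most free erase blocks first; the sort is stable, so ties keep dict order.
        candidates.sort(key=lambda d: free[d], reverse=True)
        if len(candidates) < stripe_width:
            break  # not enough distinct drives left to form another stripe
        members = candidates[:stripe_width]
        for d in members:
            free[d] -= 1
        layout.append(members)
    return layout, free


drives = {"ssd-a": 100, "ssd-b": 100, "ssd-c": 100, "ssd-big": 300}
layout, leftover = place_stripes(drives, stripe_width=2)
print(len(layout))                          # 300
print(sum("ssd-big" in s for s in layout))  # 300
print(sum("ssd-a" in s for s in layout))    # 100
print(leftover)                             # {'ssd-a': 0, 'ssd-b': 0, 'ssd-c': 0, 'ssd-big': 0}
```

In this toy pool, the large drive joins three times as many stripes as each small drive, and every block ends up in use.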

Now, of course, as Howard points out in his terrific blog post about Data Protection, the data protection schemes are drive-aware so as not to co-locate more than one erase block from any write stripe on a single drive, thereby preserving the ability to handle multiple simultaneous drive failures within any stripe.
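The drive-aware rule can be checked mechanically. Under the simplifying assumption that a stripe with `parity_blocks` parity blocks stays recoverable as long as it loses no more than that many blocks, the hypothetical helper below tries every combination of failed drives against a stripe layout (a list of drive IDs, one per block). It is exhaustive, so it only suits small toy layouts.

```python
from itertools import combinations


def survives_any_failures(layout, parity_blocks):
    """Return True if every stripe survives ANY `parity_blocks` simultaneous
    drive failures. Assumption: a stripe is recoverable while it has lost
    at most `parity_blocks` of its blocks. Exhaustive, so toy layouts only."""
    drives = sorted({d for stripe in layout for d in stripe})
    # Failing exactly k drives is the worst case: smaller failure sets lose fewer blocks.
    k = min(parity_blocks, len(drives))
    for failed in combinations(drives, k):
        failed_set = set(failed)
        for stripe in layout:
            lost = sum(1 for d in stripe if d in failed_set)
            if lost > parity_blocks:
                return False
    return True


spread = [["d1", "d2", "d3", "d4"], ["d2", "d3", "d4", "d5"]]  # one block per drive
colocated = [["d1", "d1", "d2", "d3"]]                         # two blocks on d1
print(survives_any_failures(spread, parity_blocks=2))     # True
print(survives_any_failures(colocated, parity_blocks=1))  # False
```

When each stripe is spread over distinct drives, any two drive failures cost each stripe at most two blocks; put two blocks of one stripe on the same drive and a single drive failure is enough to break a single-parity stripe.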

Because Flash gets faster and denser over time, a natural balance emerges as more heterogeneous storage resources are added to the cluster over years of operation. As your data sets grow, your ability to compute on that data grows proportionately, and you don’t need to worry about cluster imbalance, since the IOPS and GB/s you get from a Flash device will, on average, continue to grow in proportion to the TBs it provides.
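The balance argument reduces to a ratio. The sketch below computes a pool’s aggregate IOPS per TB across drive generations. Every figure in it is hypothetical: the Flash entries simply encode this post’s premise that performance grows in proportion to capacity, while the HDD entries assume roughly constant IOPS per spindle as capacity grows.

```python
def pool_iops_per_tb(devices):
    """devices: list of (count, tb_per_device, iops_per_device) tuples."""
    total_tb = sum(count * tb for count, tb, _ in devices)
    total_iops = sum(count * iops for count, _, iops in devices)
    return total_iops / total_tb


# Hypothetical figures, chosen only to encode each premise.
FLASH_GEN1 = (10, 15, 150_000)
FLASH_GEN2 = (10, 30, 300_000)  # premise: 2x capacity brings 2x IOPS
HDD_4TB = (10, 4, 200)
HDD_16TB = (10, 16, 200)        # premise: IOPS per spindle stays roughly flat

print(pool_iops_per_tb([FLASH_GEN1]))              # 10000.0
print(pool_iops_per_tb([FLASH_GEN1, FLASH_GEN2]))  # 10000.0
print(pool_iops_per_tb([HDD_4TB]))                 # 50.0
print(pool_iops_per_tb([HDD_4TB, HDD_16TB]))       # 20.0
```

Under these premises, adding a denser Flash generation leaves the pool’s IOPS per TB unchanged, while adding denser HDDs dilutes it.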

A picture says 1,000 words:

Cluster Asymmetry - Summing it all up.

VAST has engineered a new storage system architecture that eliminates the pains of scaling out storage resources over time. There’s no longer a need to isolate data into islands of performance or capacity storage, because it is now possible to scale elegantly in ways that were previously unthinkable.

By disaggregating storage logic from storage capacity and enabling system processes to be globally scheduled across evolving storage container HW and evolving storage media, customers can unify their incremental storage investments and scale their applications more easily than ever.

Add infrastructure only in the increments you need: future HW simply joins the global resource pool, without the downsides of storage pooling or tiering.

No forklift upgrades needed… just keep scaling.

Just scale stateless CPUs when performance is needed, scale storage only when capacity is required. Never scale storage when you just need CPUs, or vice versa.
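A back-of-the-envelope sizing comparison shows why this matters. In the hypothetical Python sketch below, “performance units” and the per-node, per-server, and per-enclosure figures are made-up parameters. A coupled, shared-nothing-style node brings a fixed slice of both performance and capacity, so the node count must satisfy whichever requirement is larger; a disaggregated design sizes servers and enclosures separately.

```python
import math


def coupled_nodes(perf_needed, capacity_tb, node_perf, node_tb):
    """Shared-nothing style: each node adds a fixed slice of CPU and capacity,
    so the node count must satisfy whichever requirement is larger."""
    return max(math.ceil(perf_needed / node_perf), math.ceil(capacity_tb / node_tb))


def disaggregated_units(perf_needed, capacity_tb, server_perf, enclosure_tb):
    """Disaggregated: size stateless servers for performance and storage
    enclosures for capacity, independently. Returns (servers, enclosures)."""
    return math.ceil(perf_needed / server_perf), math.ceil(capacity_tb / enclosure_tb)


# Hypothetical capacity-heavy workload: 10 performance units, 2,000 TB.
print(coupled_nodes(10, 2000, node_perf=5, node_tb=100))               # 20
print(disaggregated_units(10, 2000, server_perf=5, enclosure_tb=500))  # (2, 4)
```

In this example, the coupled design buys 20 nodes and therefore ten times the CPU the workload needs, while the disaggregated design buys 2 servers for performance and 4 enclosures for capacity.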

Monetize your HW investments over 10 years, and age infrastructure out of an ever-evolving cluster only when it no longer meets your data center’s power/cooling requirements, thanks to VAST’s Zero Compromises Guarantee, which includes 10 years of HW protection and 10 years of fixed maintenance pricing.