As the modern data science stack continues to evolve, the motivations that previously drove organizations to adopt the Hadoop Distributed File System (HDFS) have changed... as have the dependencies that chained customers to HDFS over the past decade.

The Rise of HDFS

To understand how things have evolved, it’s first important to understand why the universe centered on HDFS to begin with. In 2003, Google published a seminal white paper detailing a next-generation storage architecture consisting of commodity storage nodes that they called ‘shared-nothing’. The idea at the time was simple: since drives were faster than network adapters, it was possible to build clusters of servers with direct-attached storage devices where the compute was, in essence, brought to the media. This made sense for batch-oriented workloads where a MapReduce style of programming could chew through data on HDDs in parallel. This convention gave rise in 2006 to Hadoop, both a file system (HDFS) and the Hadoop MapReduce programming model, now often rolled into the general term Hadoop… all inspired by the Google File System.

The Fall of HDFS

Fast forward to 2021: the big data landscape looks very different than it did in 2006, and the fundamental principles behind shared-nothing architectures are no longer valid.

The Move to Real-Time Kills MapReduce

The big data community has largely evolved away from the MapReduce paradigm in favor of real-time computing in memory using tools such as Apache Spark.

Networking Innovation Transforms the Paradigm

Twenty years ago, networks were 1/400th the speed they are today. Now that it’s possible to ship the data to the compute (as opposed to the HDFS DAS model), analytics frameworks have disaggregated compute from storage, and compute machines enjoy fast, real-time access to massive all-flash data sets at DAS speed.

One Namenode Is Far Too Limiting

Hadoop’s single-namenode design limits write performance, making it unusable for high-volume workloads.

Small File I/O Crushes Cluster Performance

The pressure on the namenode intensifies with small file I/O, which can be common when dealing with XML, far files, etc. HDFS stores small files inefficiently, leading to poor namenode memory utilization, a flurry of RPC calls, block scanning, throughput degradation and ultimately reduced application performance.
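
To put rough numbers on the namenode memory pressure, here is a back-of-the-envelope sketch. It assumes the commonly cited rule of thumb that each file and each block costs the namenode on the order of 150 bytes of heap, and a 128 MiB block size. Both figures are assumptions for illustration rather than values from this article, and real usage varies by Hadoop version and configuration.

```python
# Assumptions (not from the article): a commonly cited rule of thumb of
# ~150 bytes of namenode heap per namespace object, and a 128 MiB block size.
BYTES_PER_OBJECT = 150
BLOCK_SIZE = 128 * 1024**2


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division."""
    return -(-a // b)


def namenode_heap_bytes(total_bytes: int, file_size: int) -> int:
    """Estimate namenode heap needed to track total_bytes stored as equal-sized files."""
    num_files = ceil_div(total_bytes, file_size)
    blocks_per_file = max(1, ceil_div(file_size, BLOCK_SIZE))
    # One namespace object per file plus one per block.
    objects = num_files * (1 + blocks_per_file)
    return objects * BYTES_PER_OBJECT


TIB = 1024**4
large = namenode_heap_bytes(100 * TIB, 1024**3)     # 100 TiB stored as 1 GiB files
small = namenode_heap_bytes(100 * TIB, 100 * 1024)  # 100 TiB stored as 100 KiB files

print(f"1 GiB files:   {large / 1024**2:.1f} MiB of heap")
print(f"100 KiB files: {small / 1024**3:.1f} GiB of heap")
print(f"ratio:         {small / large:,.0f}x")
```

Under these assumptions, the same 100 TiB needs over two thousand times more namenode heap when it is stored as 100 KiB files than when it is stored as 1 GiB files, which is the inefficiency described above.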

Flash Catches HDFS With Its Pants Down

So, is Flash the answer to Hadoop performance? Well, no. Hadoop was never designed for Flash, and can’t perform the erase block management or advanced data reduction that are now common in Flash-native storage architectures and that make all-flash data lakes economically practical.

The ML Python Strangles The Elephant

Hadoop is written in Java and not designed to be programmed in interactive mode, whereas data scientists can access data interactively in a Spark shell using Python or Scala to easily manipulate and analyze data sets. As Python continues to rise in popularity (particularly for Machine Learning and Deep Learning), there is a growing preference for POSIX and GPU-accelerated interfaces in ML/DL… neither of which is provided by HDFS.
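
As an illustration of what a POSIX interface means in practice: when a data set lives on a mounted file system (an NFS mount, for example), ordinary Python file APIs work without any storage-specific client. The sketch below uses a temporary directory as a stand-in for such a mount; the directory layout, file names and the `load_samples` helper are hypothetical.

```python
import tempfile
from pathlib import Path


def load_samples(root: Path) -> dict:
    """Read every *.txt file under root using only standard file I/O."""
    return {p.name: p.read_text() for p in sorted(root.rglob("*.txt"))}


# A temporary directory stands in for a mount point such as an NFS export.
with tempfile.TemporaryDirectory() as mount:
    root = Path(mount)
    (root / "train").mkdir()
    (root / "train" / "a.txt").write_text("sample a")
    (root / "train" / "b.txt").write_text("sample b")
    samples = load_samples(root)

print(samples)
```

Nothing in the loader knows what kind of storage sits behind the path, which is the appeal of a POSIX interface for interactive Python work.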

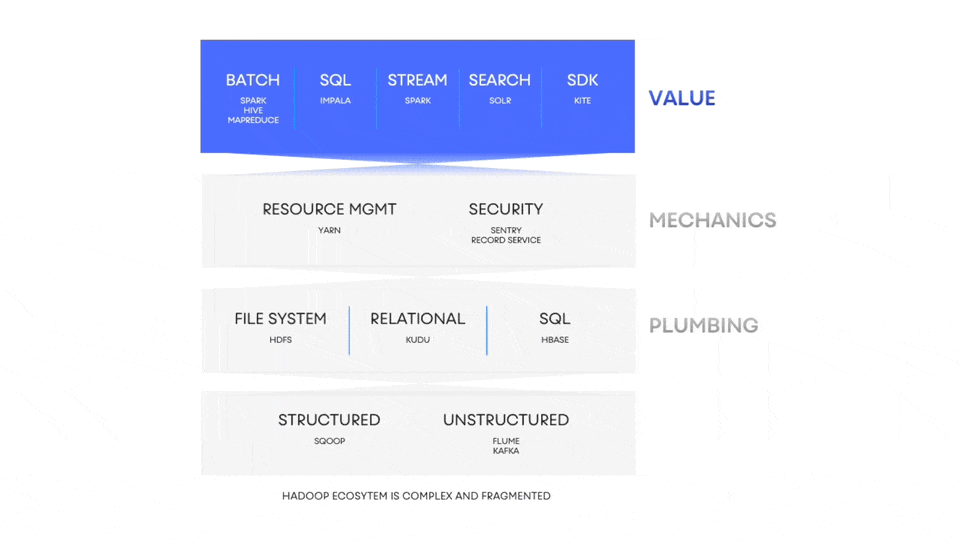

HDFS-Dependent Services Are Losing Traction

Even if the above weren’t true, HDFS would still be losing relevance, since the services that were once hard-coded to HDFS are increasingly irrelevant. HDFS-centric tools such as Hive, MapReduce, Solr and Sqoop are simply not the tools du jour of a modern data scientist… and when computing frameworks lose relevance, so does their plumbing. Spark, Presto, Elastic, Kafka: these new tools can equally make use of S3 and NAS, eliminating the need for HDFS.

The picture is now much murkier than it was in the heyday of Hadoop.

The Data Lake is Dead! Long Live the Data Lake!

The concept of a storage abstraction for structured and semi-structured data was never a bad one; the problem was simply that HDFS was not developed as an enterprise storage technology. As a result, it was not designed to evolve with innovations in networking, storage media and protocols.

All the while, Amazon has popularized the S3 object storage interface as the modern alternative for big data workloads. Today, customers have a wide variety of public cloud and private cloud storage options for graduating from HDFS. These options are not only available for new workloads: the Apache community has also created the S3A client to enable S3 storage to be presented to Apache tools as if it were HDFS.
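
As a rough sketch of what moving a Spark job from HDFS to S3A can look like, the helpers below build the Spark properties that point `s3a://` paths at an S3-compatible endpoint and rewrite an `hdfs://` URI to its `s3a://` counterpart. The `fs.s3a.*` keys are standard Hadoop S3A properties; the helper names, endpoint, bucket and credentials are placeholders invented for illustration.

```python
from urllib.parse import urlparse


def s3a_spark_conf(endpoint: str, access_key: str, secret_key: str) -> dict:
    """Build Spark properties that route s3a:// paths to an S3-compatible endpoint."""
    return {
        "spark.hadoop.fs.s3a.endpoint": endpoint,
        "spark.hadoop.fs.s3a.access.key": access_key,
        "spark.hadoop.fs.s3a.secret.key": secret_key,
        # Path-style addressing is often needed for non-AWS S3 endpoints.
        "spark.hadoop.fs.s3a.path.style.access": "true",
    }


def to_s3a(hdfs_uri: str, bucket: str) -> str:
    """Map an hdfs://namenode/path URI to s3a://bucket/path (hypothetical helper)."""
    path = urlparse(hdfs_uri).path.lstrip("/")
    return f"s3a://{bucket}/{path}"


# Placeholder endpoint, credentials and bucket name.
conf = s3a_spark_conf("https://s3.example.internal", "ACCESS_KEY", "SECRET_KEY")
path = to_s3a("hdfs://namenode:8020/warehouse/events", bucket="datalake")
print(path)

# With PySpark installed and the hadoop-aws module on the classpath,
# usage would look roughly like this:
#   from pyspark.sql import SparkSession
#   builder = SparkSession.builder.appName("s3a-demo")
#   for key, value in conf.items():
#       builder = builder.config(key, value)
#   df = builder.getOrCreate().read.parquet(path)
```

The job logic itself does not change: only the URI scheme and a handful of connection properties differ, which is what lets Apache tools treat S3 storage as if it were HDFS.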

The S3 architecture also brings enterprise storage concepts to the data lake: HTTPS-based access enables long-haul data access, authorization is easier thanks to S3’s bucket-based permissioning model, and resilience is better with versioning, locking and geo-replication.

Now, object storage is not one size fits all, and (as mentioned above) the surge in Python programming and GPU-accelerated computing has created a renaissance in file-based storage adoption. But are all file storage systems created equal? Not really, because like HDFS, they are all based on the same 20-year-old shared-nothing concepts applied to legacy storage media (HDDs).

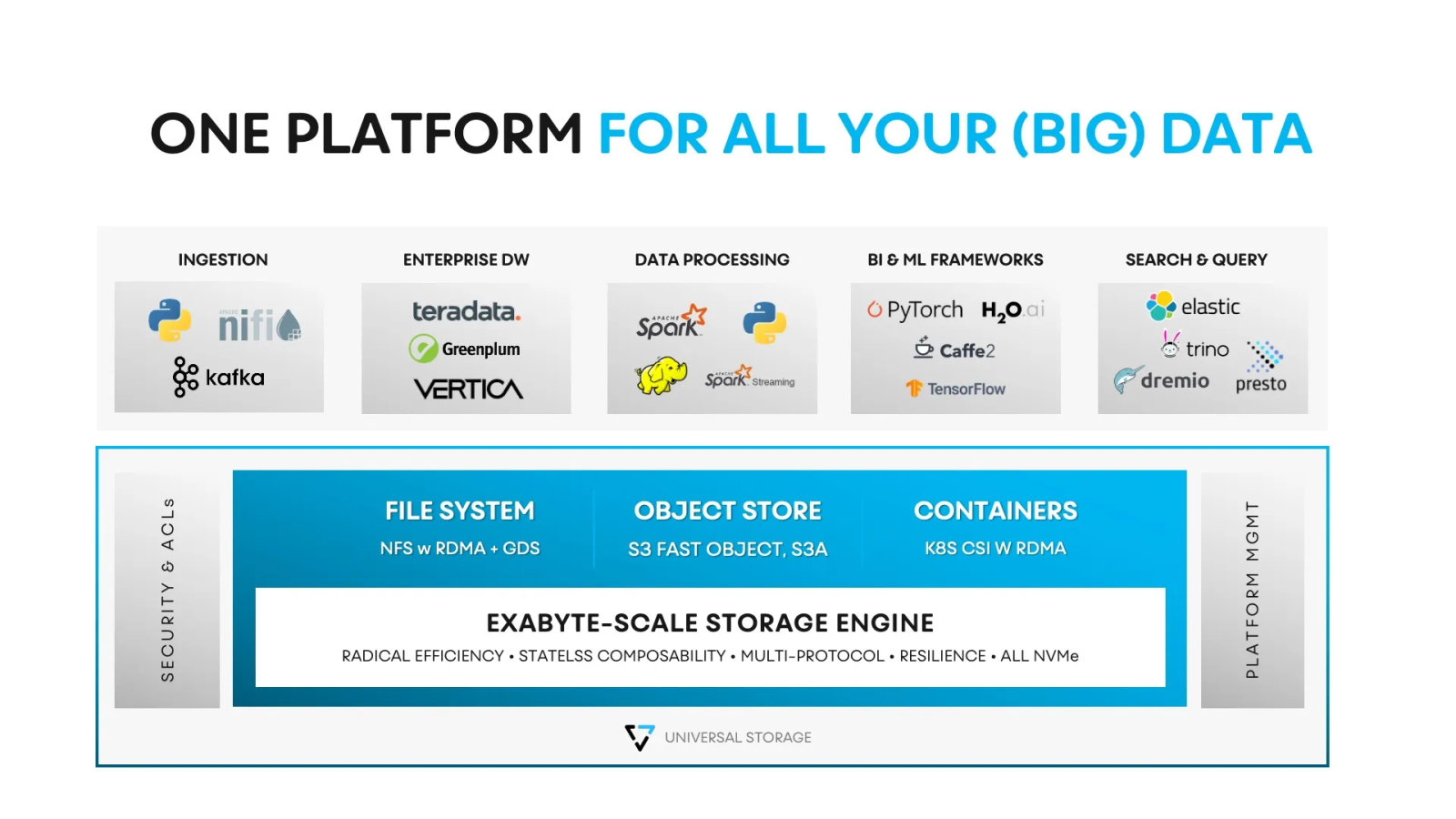

Enter VAST Data. VAST's new Disaggregated and Shared-Everything (DASE) architecture breaks all the tradeoffs of shared-nothing architectures. By leveraging next-generation low-latency NVMe SSD and networking technology, DASE disaggregates compute from the state of the cluster, while allowing each stateless containerized storage server to simultaneously access all of the data. The result is a highly scalable and highly affordable all-flash, file and object platform that allows you to consolidate your highly fragmented big data ecosystem.

Here’s a quick look at different interface considerations in 2021 when comparing generic industry solutions with VAST Data’s unique approach to data lake storage, which features many VAST-exclusive NFS client-side enhancements:

| Consideration | HDFS | S3 | NFS | VAST Data |
| --- | --- | --- | --- | --- |
| Supports Apache Toolsets | Yes | Yes | No | Yes: VAST S3 works with S3A |
| Optimized for Python | No | No | Yes | Yes: NFS+RDMA for DAS Speed |
| Optimized for GPU | No | No | No | Yes: GPUDirect Storage Enabled |
| Multi-Protocol Namespace | No | No | No | Yes: NFS & S3 MultiProtocol |
| Distributed Metadata | No | Yes | Yes | Yes: scales to 10,000 Machines |
| Optimized for Small Files | No | No | Yes | Fast NFS & S3 At Any I/O Size |
| Built for Hyperscale Flash | No | No | No | Yes: Radical Flash Efficiency |
| Global Data Compression | No | No | No | Yes: VAST’s Similarity Reduction |

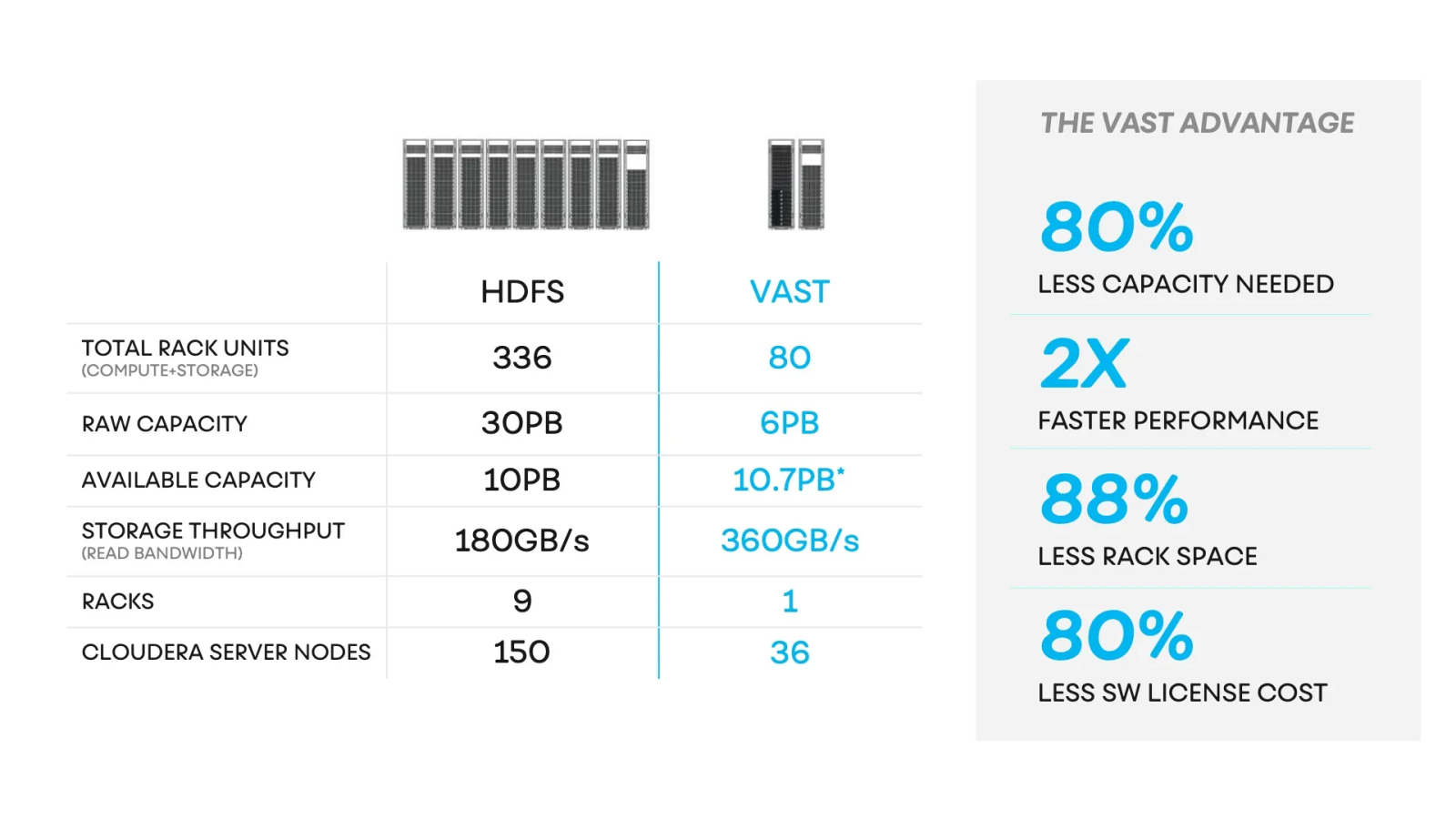

Enterprises ultimately get a solution that saves them both big data pipeline complexity (you don’t need multiple systems to store different types of data) and data center hardware. Compared with what’s possible with VAST, an HDFS-based solution delivered to customers looks very different. This VAST vs. HDFS comparison puts the savings into perspective.

Change is the only constant when it comes to big data and data science. Unfortunately, innovation doesn’t stop to wait for customers to catch up... but by future-proofing your data and data infrastructure, you can save your organization from future headaches as it reconciles this constant change. VAST combines fast file and fast object access on an enterprise all-flash platform that redefines the economics of flash and makes it practical to build all-flash data lakes for ALL of your big data ecosystem, making the transition to Artificial Intelligence and Machine Learning effortless.

Don’t take our word for it… learn how Agoda, one of the world’s leading travel websites, optimizes their customer experience by unlocking access to vast reserves of big data using the VAST Data Platform.