In the HPC realm, the Texas Advanced Computing Center (TACC) is known for deploying some of the biggest and baddest systems in the world. These include Frontera, which formerly ranked #5 on the TOP500 list of the world’s fastest computers, and Ranger, which had the famous Sun Microsystems Magnum InfiniBand switch with longhorns mounted on top… a switch that can now be found in the Computer History Museum.

As an aside, you can mount longhorns on virtually any piece of IT equipment, car, bedpost or household pet; just visit skullbliss.com! 🤘

OK, back to being serious and talking about data. For almost two decades, TACC has powered these machines with the Lustre File System. While Lustre is a popular option for HPC centers working to balance scale and affordability, its complexity and periodic fragility have also reinforced the “no pain, no gain” mentality that is common in scalable HPC and AI computing.

In 2022, Dan Stanzione (Executive Director, TACC) gave a talk at the Rice Energy HPC conference, later covered by HPCwire, about TACC’s scaling plans for their first ~exascale-class system, Horizon. We were already having some conversations with TACC at the time, but I was surprised to see us called out as a potential option for such a large system. In 2023, the TACC team returned to deliver two follow-on talks at Rice:

In talk 1, Dan outlined how the price of flash infrastructure (with a side of revolutionary data reduction) has dropped to the point where TACC can now consider unifying their /scratch tier and /nearline tier into one bigger, faster tier.

In talk 2, Tommy Minyard (TACC Director of Advanced Computing Systems) discussed their recent storage explorations and some of the things that “pleasantly surprised” them about VAST.

Today, we’re excited to announce that TACC has selected VAST as the data platform for Stampede3, their next-generation large-scale research computer that will power applications for 10,000+ of the United States’ scientists and engineers. The Stampede3 selection precedes the selection for their next really big system, which will likely be announced later this year.

For the rest of this blog, I’ll outline the three critical factors that led TACC to choose VAST. Before that, here’s a quick video that dives into Tommy’s thoughts on I/O for these systems.

OK, now to the back story.

First, it’s important to point out that pain has a real price. In the video, Tommy opens his I/O section by noting that file systems have been the most significant contributor to system downtime, which led TACC to look beyond Lustre for new options. Today’s supercomputers are not cheap… customers can spend over $100M on systems that must be fully operational to realize their value. And downtime is costly… not just in admin and user frustration, but also explicitly, in the form of diminished system value and lost opportunity as researchers wait to run their codes.

Across these talks and reports, DAOS, BeeGFS, Weka and VAST were all in the mix as TACC considered their options beyond Lustre. VAST was the only solution without a bespoke parallel file system client… instead offering a simple NFS-based approach, which was perceived as the lowest-performing option (reflecting the classic anti-NFS bias in HPC).

So the TACC team got to testing.

Test #1: Data Reduction

After loading heaps of scientific (and pre-compressed) data into the VAST system, TACC was pleasantly surprised to find an average of 2:1 data reduction across their data. This, coupled with VAST’s use of low-cost QLC flash and our new low-overhead data protection, led TACC to conclude that they could merge their high-performance flash plans and their lower-performance nearline storage plans into a single all-flash system. That design eliminates data migration between tiers and accelerates the entire active data set by keeping all of it on flash. All-flash also helps as TACC transitions to supporting more AI workloads, since these applications are heavy with random reads, and random reads hate HDD storage.
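To make the consolidation arithmetic concrete, here is a back-of-the-envelope sketch. It simply applies a logical:physical reduction ratio (2.0 for the 2:1 average TACC observed) to capacity; the function names and the petabyte figures in the usage lines are hypothetical, not TACC’s actual sizing, and real reduction varies from data set to data set.

```python
def effective_capacity_pb(usable_pb: float, reduction_ratio: float) -> float:
    """Logical capacity that fits on `usable_pb` of physical flash.

    reduction_ratio is logical:physical, e.g. 2.0 for a 2:1 average reduction.
    """
    if usable_pb < 0 or reduction_ratio <= 0:
        raise ValueError("capacity must be non-negative and ratio positive")
    return usable_pb * reduction_ratio


def physical_flash_needed_pb(logical_pb: float, reduction_ratio: float) -> float:
    """Physical flash required to hold `logical_pb` of data after reduction."""
    if logical_pb < 0 or reduction_ratio <= 0:
        raise ValueError("capacity must be non-negative and ratio positive")
    return logical_pb / reduction_ratio


# Hypothetical example: 10 PB of usable flash at 2:1 holds 20 PB of data.
print(effective_capacity_pb(10.0, 2.0))

# Hypothetical example: a combined /scratch + /nearline data set of 30 PB
# would need about 15 PB of physical flash at the same 2:1 ratio.
print(physical_flash_needed_pb(30.0, 2.0))
```

The point of the sketch is that halving the physical capacity needed roughly halves the flash bill, which is what makes a single all-flash tier competitive with a flash-plus-HDD design.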

Test #2: Application Scaling and Performance

Enter Colin. Colin is a researcher at CMU who studies nuclear physics and runs simulations on TACC’s systems. I don’t know all of the details about how his code reads and writes data, but I’ve come to understand that his ‘nuclear codes 🙂’ play hell with scalable file systems. So, TACC uses Colin’s code as a litmus test for how file systems hold up under the most stressful and pathological conditions.

Test #2.1 - does it work?

On the first pass, VAST is the only new system that can stand up to the load. Everything else fails.

Test #2.2 - no really, does it work?

After much experimentation and optimization, TACC had managed to scale Colin’s code on Lustre to 350 clients before the Lustre metadata server fell over. So, at this point TACC decided to test VAST at an equivalent level of client scale. The result?

A. VAST scales fine to 350 clients, putting it neck and neck with Lustre in terms of scaling.

B. At equivalent client scale, Colin’s code runs 30% faster on VAST than on Lustre. Whoa.

Test #2.3 - whoa. so, where does it break?

Then TACC opened things up and started trying to break VAST with their Frontera supercomputer, which at the time was the 19th fastest supercomputer in the world.

… 500 nodes. Still running.

… 1,000 nodes. Still running...

… 2,000 nodes. All good!

… 4,000 nodes. OK, it still works. We give up…

The VAST system had made its point. The power of VAST’s parallel DASE architecture, along with great engineering and QA, has given TACC hope for a new standard of scalability now that metadata and data are embarrassingly parallel across all of the VAST servers.

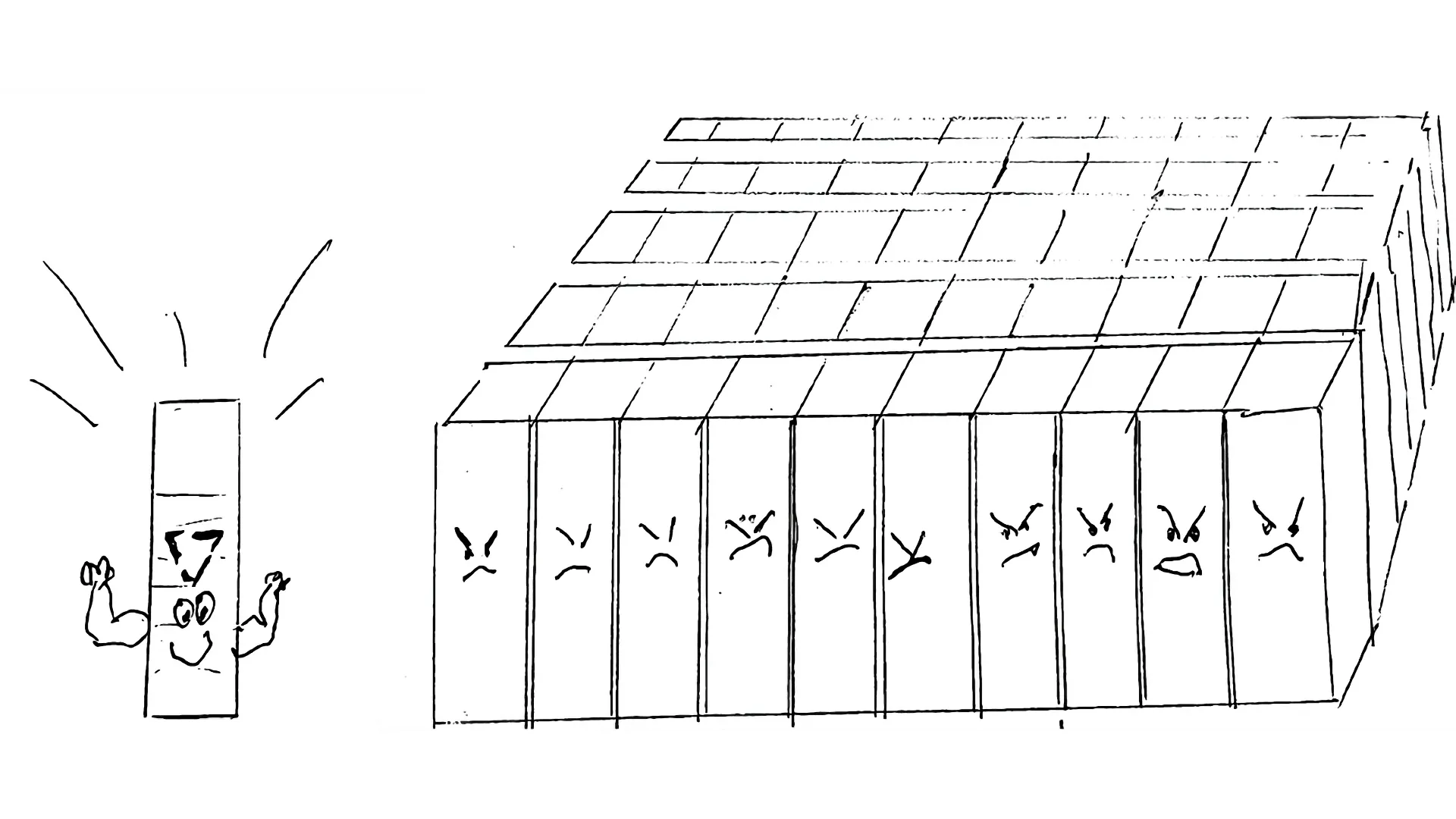

Fortunately, we were able to grab some CCTV footage during the benchmark to confirm that 20U of HW running VAST SW could stand up to 50 racks of Dell servers:

Test #3: Failure Handling

As TACC went to upgrade to a newer version of VAST-OS, the upgrade process halted when the system discovered a failed storage server. During this time, the system was left in a state where it was concurrently running multiple versions of our software.

So, the VAST support team coordinated an RMA and the Sales team called to apologize for the headache. We expected a browbeating, but what we received back from TACC was congratulations. What we learned in this process is that HPC file system updates are largely done offline (which causes downtime), and that running in production with multiple versions of software on a common data platform would be unthinkable on legacy infrastructure. Not only did the system not hiccup during a HW failure (thanks to our DASE architecture, losing one of n servers leaves the other n-1 serving, so performance degrades only by that one server’s share), but it also survived a bug we found in the software installer. No downtime. Barely any perceptible performance loss. What TACC found was a system that was more resilient to failures while running applications at scale.
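The n-1 point can be put as simple arithmetic. The sketch below assumes identical servers, load spread evenly across them, and aggregate performance scaling linearly with server count; the server counts in the usage lines are hypothetical and are not the size of TACC’s system.

```python
def remaining_performance_fraction(n_servers: int, failed: int = 1) -> float:
    """Fraction of aggregate performance left when `failed` of `n_servers`
    identical, evenly loaded servers are offline (linear-scaling assumption)."""
    if n_servers <= 0 or not 0 <= failed < n_servers:
        raise ValueError("need n_servers > 0 and 0 <= failed < n_servers")
    return (n_servers - failed) / n_servers


# Hypothetical 8-server cluster with one failed server: 7/8 of peak remains.
print(remaining_performance_fraction(8))

# The more servers share the load, the smaller the hit from any single failure.
print(remaining_performance_fraction(32))
```

Under these assumptions, a single failure costs 1/n of peak rather than taking the file system offline, which matches the “barely any perceptible performance loss” TACC observed.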

–

So, that’s the story. The pain vs. gain equation has been forever altered.

In the end, Stampede3 will kick off a bright partnership between VAST and TACC, and we want to thank them for their support and guidance as we chart a new path to exascale AI and HPC.