For decades, datastores have been unaware of applications, and applications have been equally unaware of data events. This division between applications and data has produced fragmented approaches to building data pipelines and a batch-processing mentality that separates data streams from deep data analysis.

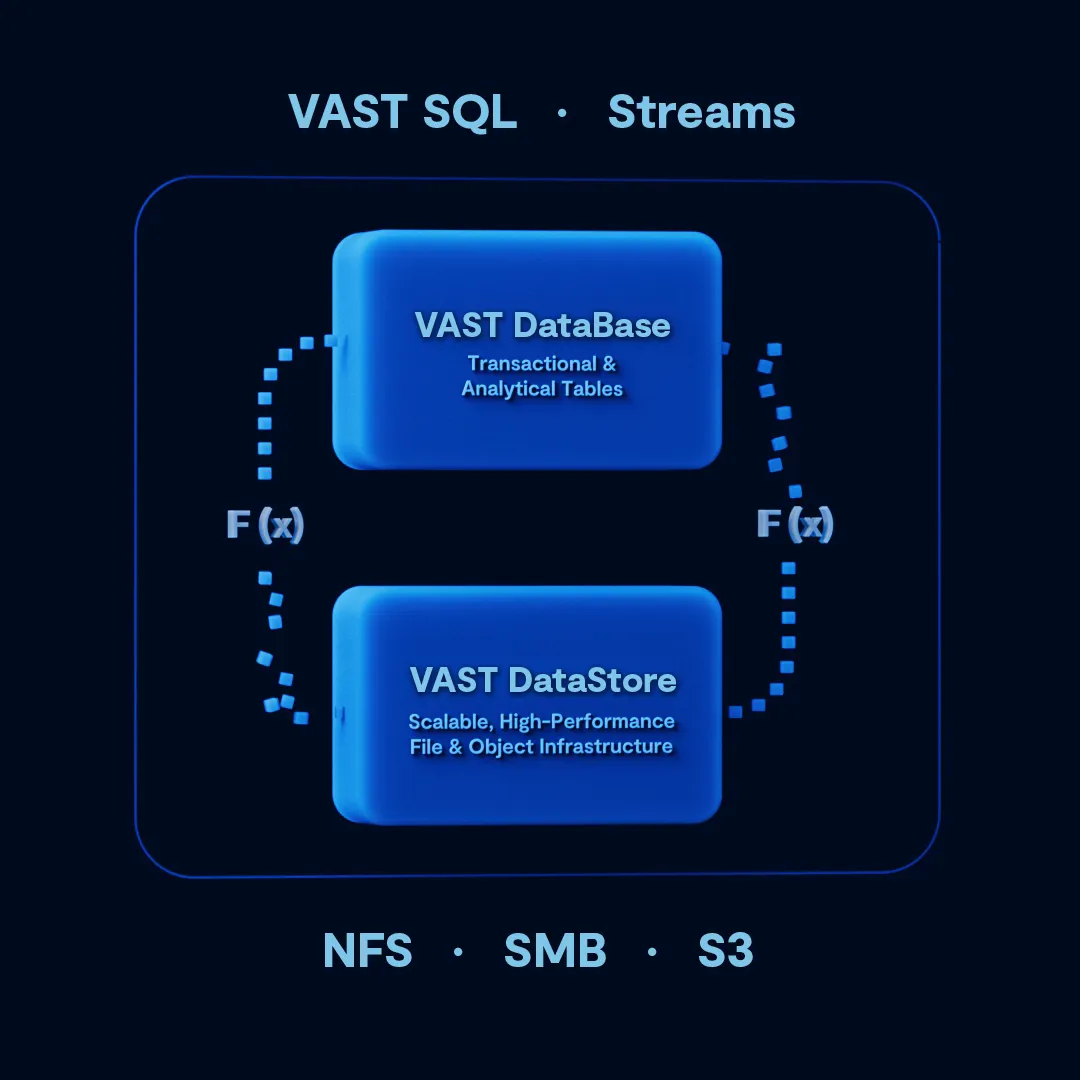

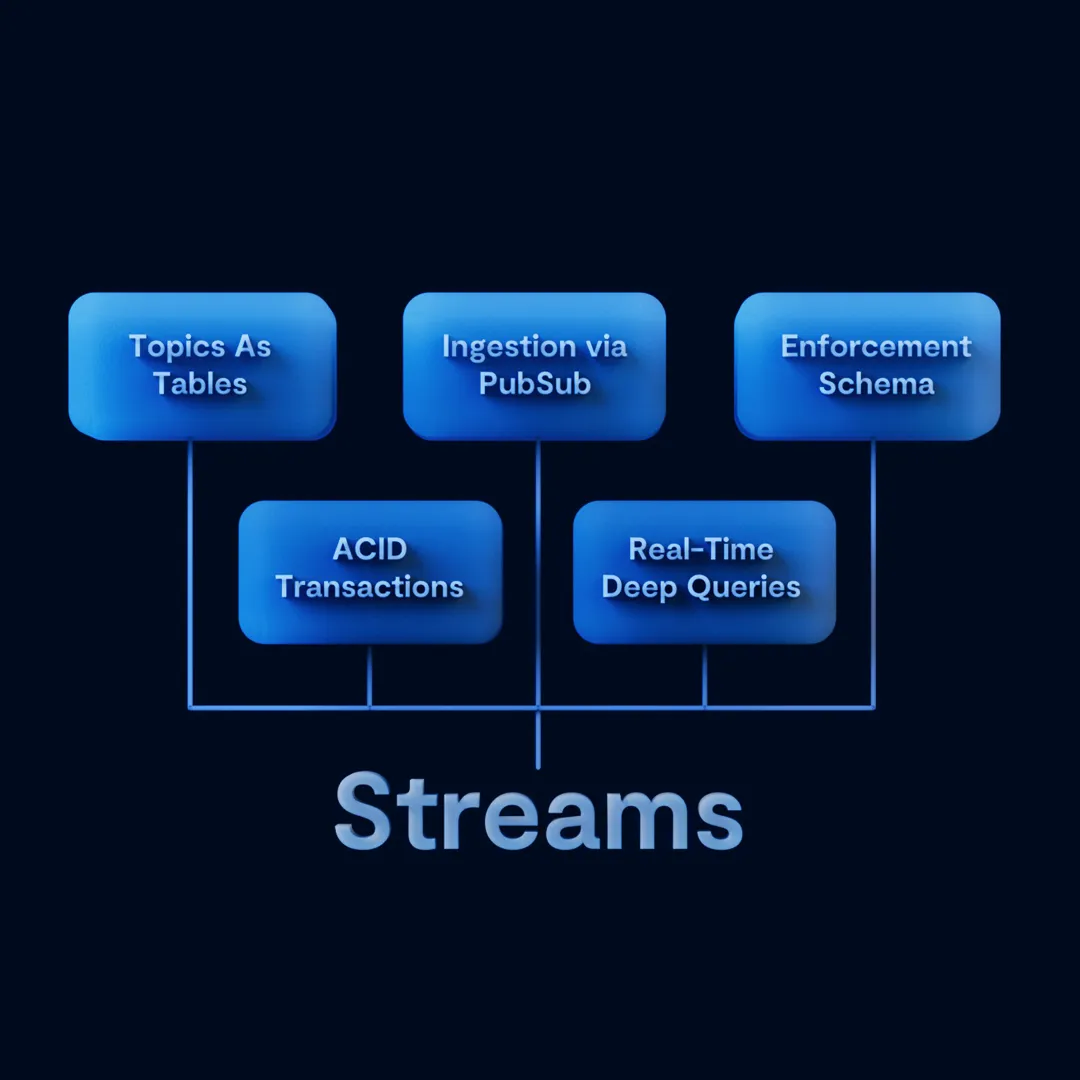

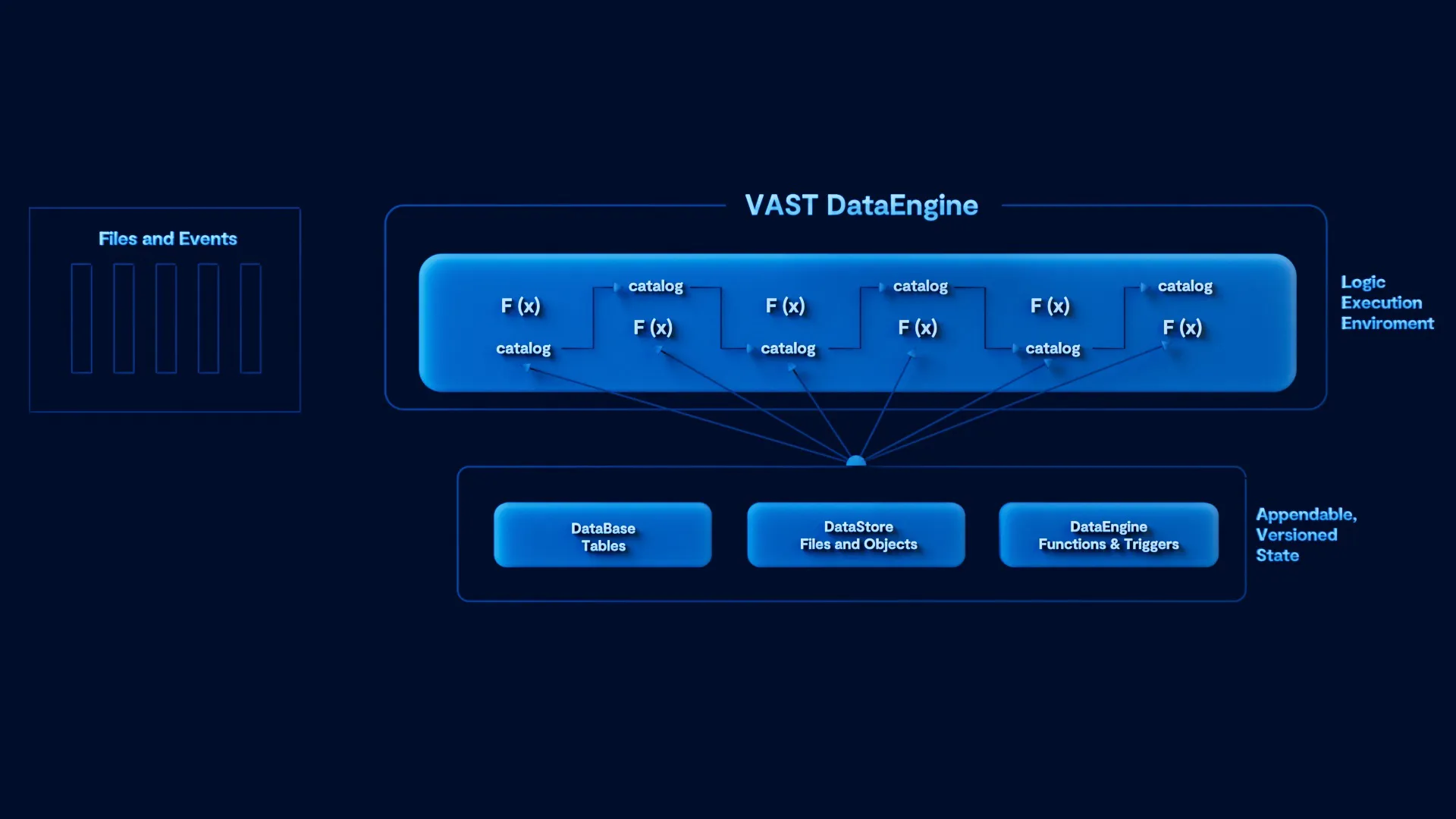

The VAST Data Platform aims to break the tradeoff between data streaming and global insight by engineering data processing and event notifications natively into the system.

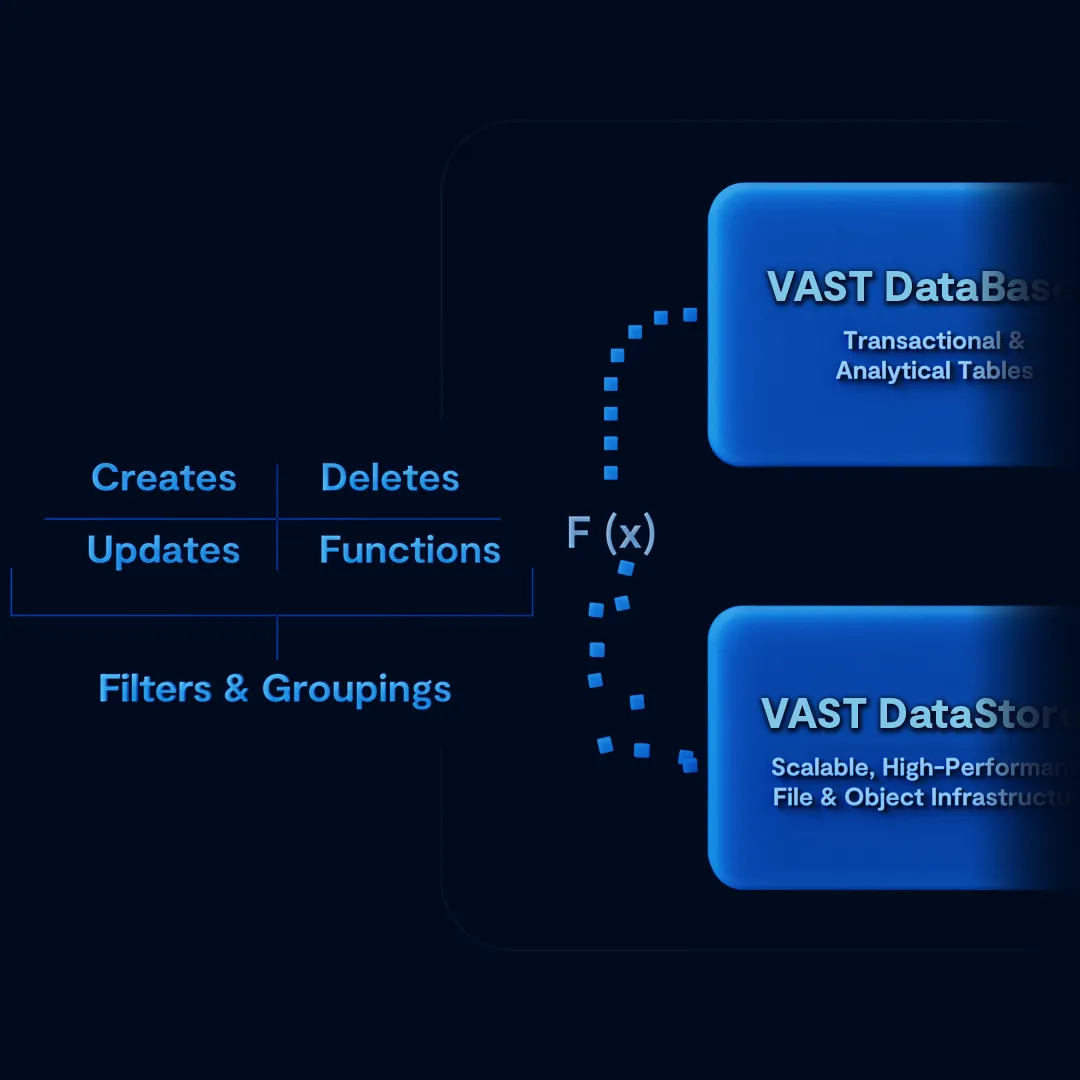

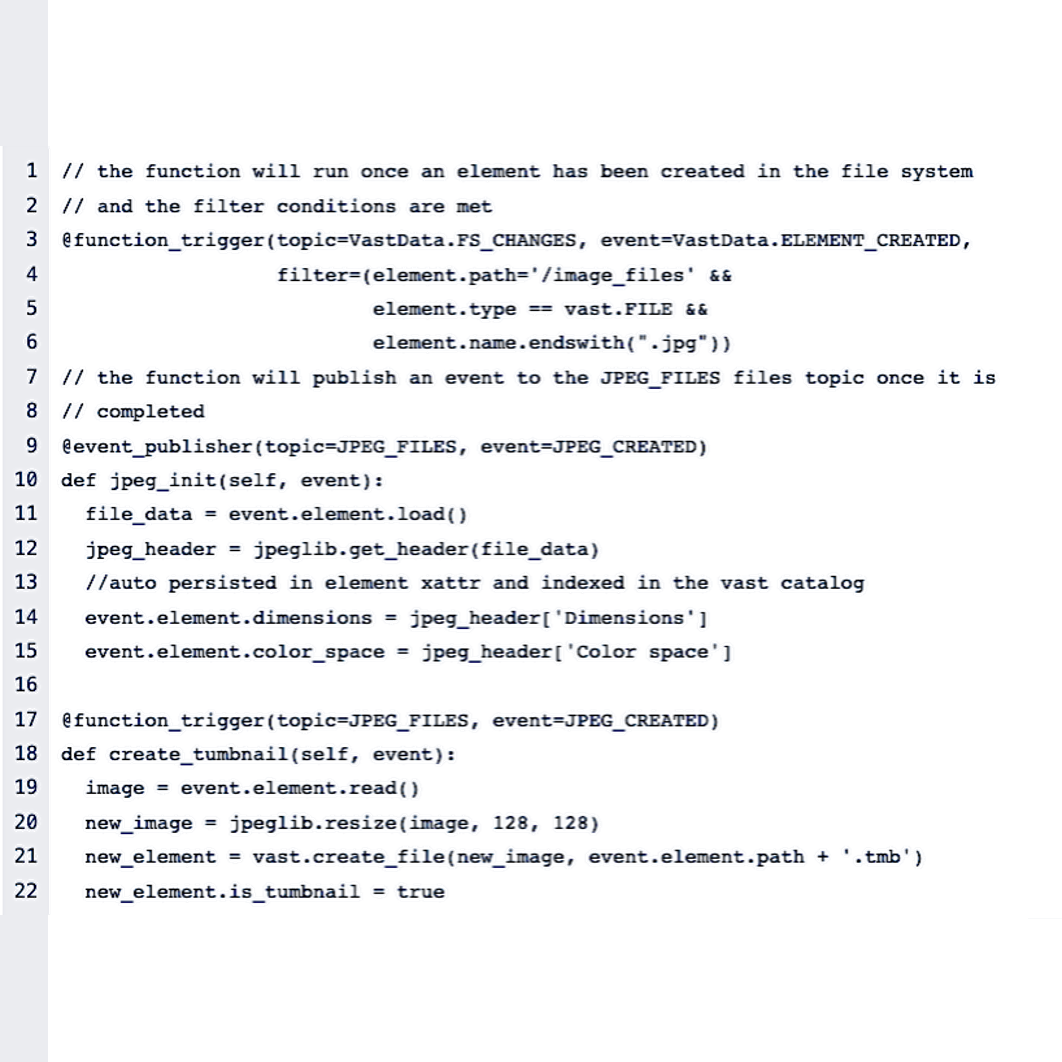

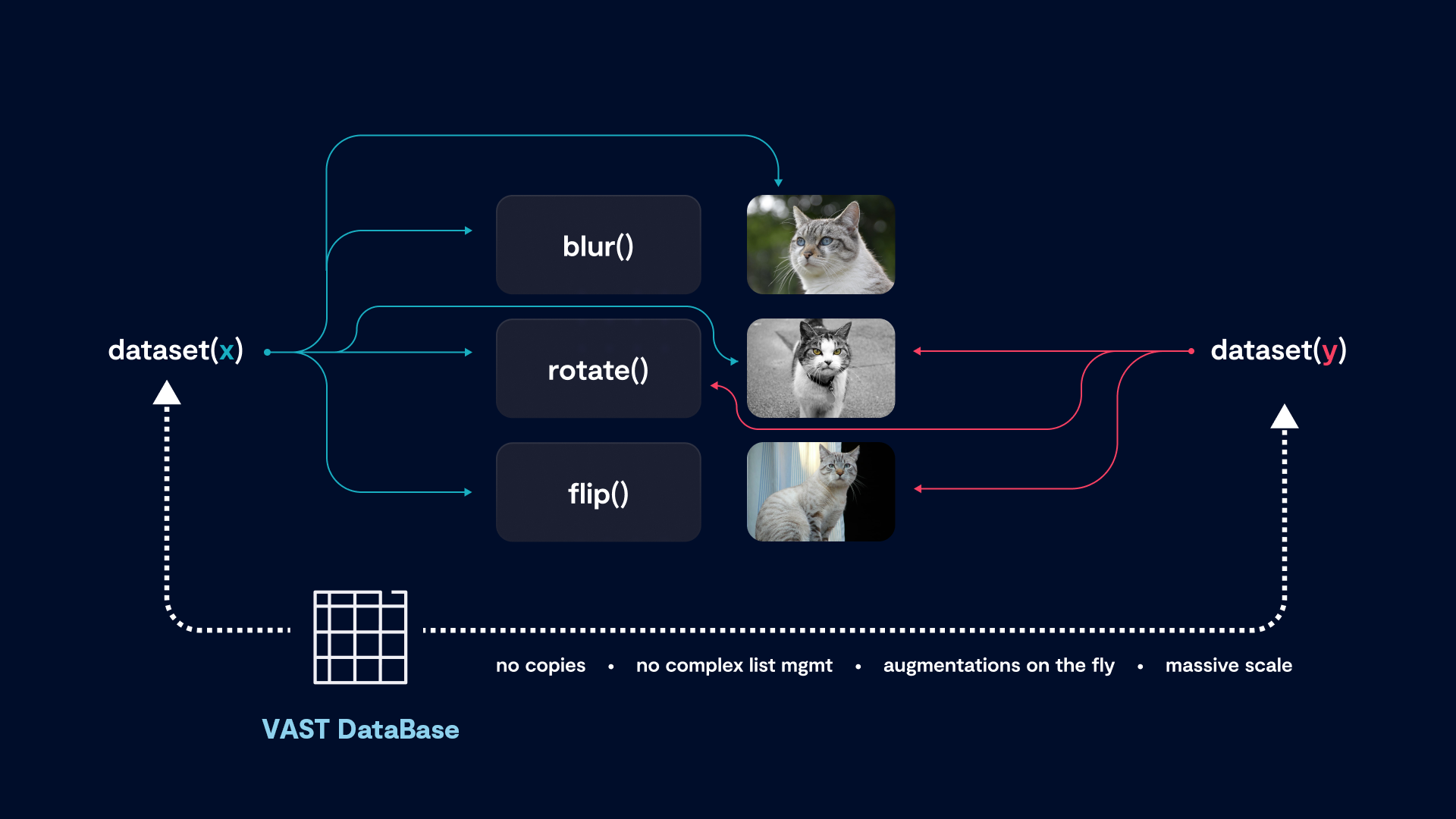

By supporting two new types of data, functions and triggers, the VAST Data Platform makes data dynamic, in the same way that JavaScript made websites dynamically interactive.

With the VAST DataEngine, data and changes to data trigger actions, those actions are performed on the data, and the system keeps processing recursively. The DataEngine is the basis for perpetual AI training and inference and, we hope, will be the basis for the AI-powered discoveries of the future.
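The loop described above (a write fires a trigger, the triggered function writes new data, and that write can fire further triggers) can be illustrated with a toy sketch in Python. This is not the VAST API: `ToyEngine`, `on_write`, `write`, `run`, and the table names are hypothetical and exist only to show the pattern, and the sketch bounds the loop with `max_events` rather than running indefinitely.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Event:
    """A record of one write: which table changed and what was written."""
    table: str
    row: dict


class ToyEngine:
    """Hypothetical in-memory stand-in for a store with triggers and functions."""

    def __init__(self):
        self.tables = {}      # table name -> list of rows
        self.triggers = {}    # table name -> list of functions to call on write
        self.queue = deque()  # pending data events

    def on_write(self, table, fn):
        """Register a function to run whenever a row is written to `table`."""
        self.triggers.setdefault(table, []).append(fn)

    def write(self, table, row):
        """Store the row and emit an event describing the change."""
        self.tables.setdefault(table, []).append(row)
        self.queue.append(Event(table, row))

    def run(self, max_events=1000):
        """Process events until the queue drains or the bound is reached."""
        processed = 0
        while self.queue and processed < max_events:
            event = self.queue.popleft()
            for fn in self.triggers.get(event.table, []):
                fn(self, event)  # a function may write again, queuing new events
            processed += 1
        return processed


def extract_length(engine, event):
    # Derive a feature row from each raw row.
    engine.write("features", {"source": event.row["name"],
                              "length": len(event.row["body"])})


def flag_large(engine, event):
    # React to derived data: raise an alert for long bodies.
    if event.row["length"] > 10:
        engine.write("alerts", {"source": event.row["source"]})


engine = ToyEngine()
engine.on_write("raw", extract_length)
engine.on_write("features", flag_large)
engine.write("raw", {"name": "a.txt", "body": "hello"})
engine.write("raw", {"name": "b.txt", "body": "hello, world!"})
processed = engine.run()
print(processed, engine.tables["alerts"])
```

Here each function's output is itself data, so a chain of triggers forms a pipeline without a separate batch step. A real system would also need persistence, ordering guarantees, and protection against trigger cycles, none of which this sketch attempts.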